There are a lot of technical ways to achieve an updated database (to many called a model) in Azure Analysis Services, one of them is by using Azure Automation which allows you to orchestrate processes in Azure amongst other things.

One of the components of Azure Automation is the concept of a Runbook. A Runbook contains some sort of a script i.e. Powershell or graphical representation, which can be scheduled or activated by a Webhook. A webhook is an HTTP endpoint, which means you can activate the runbook from almost any service, application and/or device. In fact, if you can do a POST to the HTTP endpoint you are good to go.

So really, this comes down to how you create the runbook, because once created, you can think up a gazillion scenarios to invoke the script. Could be Power Apps, could be Power Automate, could be Azure Data Factory or a completely different process where you need to kick of an automation script.

To complete this guide, you will need the following services created:

- Azure Automation Account (quick guide here)

- Azure Analysis Services (quick guide here)

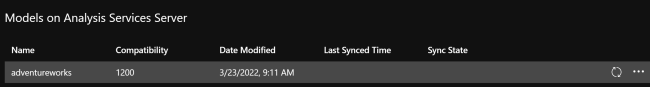

For the Azure Analysis Services Model we can simply use a sample data option, provided by creating a new Model in Azure Analysis Services. This allows you to select a sample data which creates an Adventure Works sample model.

Now that we have our Automation Account and model ready, we can go ahead and stitch everything together.

In order for us to run this unattended, we will be needing an App Registration in our Azure Active Directory (make sure it’s in the same tenant). Microsoft has a guide here. You will need to record the Application ID (Client ID) and also the Secret you have created. With this information, our first step is to create our Automation Account Credentials in the Shared Resource section of the Automation Account.

Give the credentials a meaningful name (1), maybe even be bold and name it the same as you did when registering the App 😉. (2) use the Application ID (Client ID) as user name and finally the Secret as Password (3) – repeat for confirmation. Once these credentials have been setup, we can reference them from our Runbook, which is the next step.

Next step is to generate the Powershell script that we will schedule or call from the outside via a webhook.

This is done by creating a new Runbook, in the Automation Account.

Create a new Runbook, select a meaningful name, select the Runbook Type which in our case is Powershell. Lastly provide the correct version of Powershell you will be using – make sure the correct libraries are loaded, see how to manage the modules here.

And now to the actual Powershell code.

We will begin by defining the parameters for the Runbook, which are DatabaseName, AnalysisServer and RefreshType. All three combined makes a good starting point for a dynamic way to expose the option to refresh a model in Azure Analysis Services. The code looks like this:

param

(

[Parameter (Mandatory = $false)]

[String] $DatabaseName,

[Parameter (Mandatory = $false)]

[String] $AnalysisServer,

[Parameter (Mandatory = $false)]

[String] $RefreshType

)This way, we can from the outside tell the Runbook which database on which server to refresh.

Then we will assign the tenant id to a variable (this could arguably be set from a Runbook variable or parameter) and then we will assign the credentials we just created to another variable. Please replace #!#CredentialName#!# with the name that you have created the credentials under.

As soon as we have the credentials assigned, we can log in to the Azure Analysis Services instance and perform the ProcessASDatabase method. Note that the refresh type has to match the definition below.

# Get the values stored in the Assets

$TenantId = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

$Credential = Get-AutomationPSCredential -Name "#!#CredentialName#!#"

# Log in to Azure Analysis Services using the Azure AD Service Principal

Add-AzureAnalysisServicesAccount `

-Credential $Credential `

-ServicePrincipal `

-TenantId $TenantId `

-RolloutEnvironment "northeurope.asazure.windows.net"

# Perform a Process Full on the Azure Analysis Services database

Invoke-ProcessASDatabase `

-Server $AnalysisServer `

-DatabaseName $DatabaseName `

-RefreshType $RefreshTypeRefresh Type definitions (see detailed description here):

ProcessFull, ProcessAdd, ProcessUpdate, ProcessIndexes, ProcessData, ProcessDefault, ProcessClear, ProcessStructure, ProcessClearStructureOnly, ProcessScriptCache, ProcessRecalc, ProcessDefragOnce we are at this stage, we can publish and/or test our Runbook by pressing Publish or opening the Test Pane. Note: You cannot run a Runbook that is not published.

When published, we have several options to invoke the Runbook, either by Schedule or by Webhook.

The Webhook creates a URL which we can use in other applications to invoke the Runbook. The parameters need to be assigned once the Webhook is defined. This means you can have a unique URL for each set of parameters you have.

Note, you need to copy and store the URL generated when creating the Webhook – as the warning says, you cannot go back and retrieve it.

Last step is to define the parameter values. Assign the name of the Database and the Server as well as the Refresh Type you desire.

After the parameter assignment, you should end up with a final wizard page looking like this:

Once we click Create, the Webhook is live and usable.

I hope this post will be of use, or at least of inspiration to someone out there, on the great things possible in Azure.

![]()